Heterogeneous data fusion for continuous patient stratification

This work was done by my PhD student Nathan Painchaud, co-supervised with Prof. Pierre-Marc Jodoin, an expert in AI at the University of Sherbrooke.

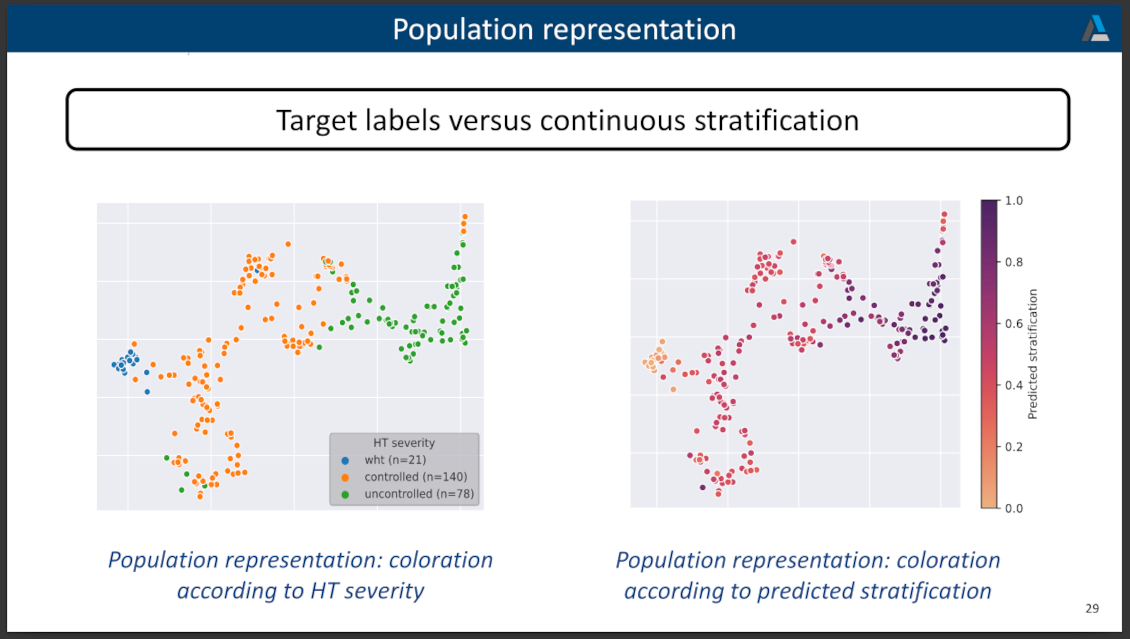

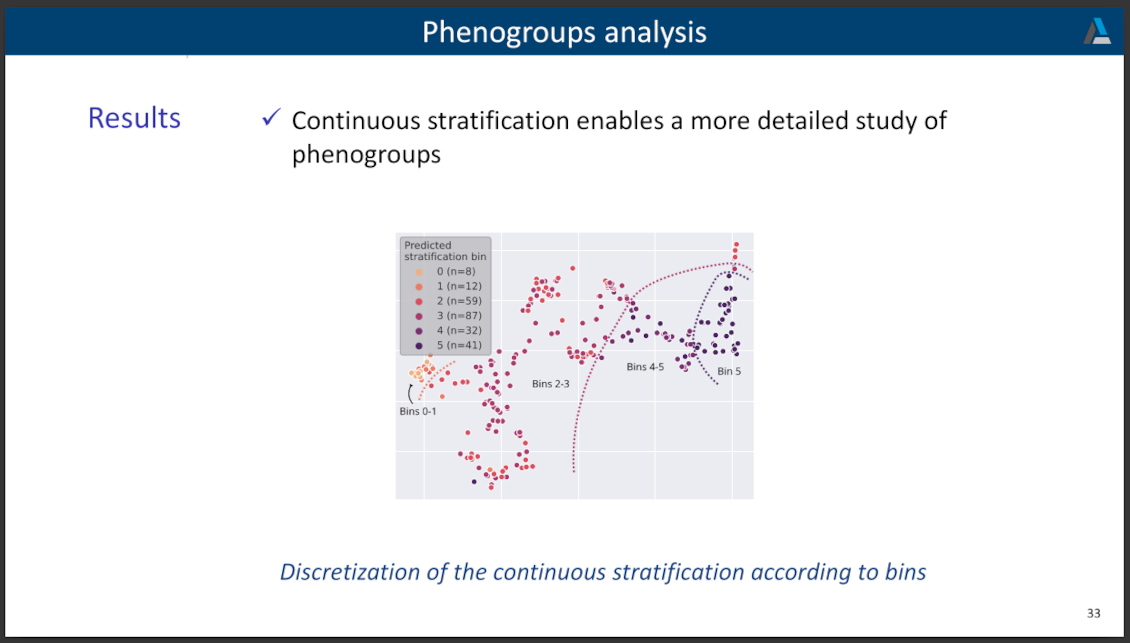

We developed a transformer-based approach to efficiently merge information extracted from echocardiographic image sequences with data from electronic health records, in order to learn a continuous representation of patients with hypertension. Our method first projects each variable into its own representation space using modality-specific approaches. These standardized representations of the multimodal data are then fed to a transformer encoder, which learns to merge them into a comprehensive representation of the patient through a fine-tuning task: predicting a clinical rating. This fine-tuning task is formulated as an ordinal classification to enforce a pathological continuum in the representation space.
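To illustrate why an ordinal formulation encourages a continuum, here is a minimal sketch of one common way to set up ordinal classification: the cumulative binary encoding, where a grade among K ordered grades becomes K-1 questions of the form "is the grade above threshold j?". This is an assumption chosen for illustration, not necessarily the formulation used in this work; the function names and the choice of 4 grades in the usage note are hypothetical.

```python
import math


def ordinal_targets(label, num_classes):
    """Encode ordinal label k as K-1 cumulative binary targets ("is grade > j?")."""
    return [1.0 if label > j else 0.0 for j in range(num_classes - 1)]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def ordinal_loss(logits, label):
    """Sum of binary cross-entropies over the K-1 thresholds (stable logit form)."""
    targets = ordinal_targets(label, len(logits) + 1)
    loss = 0.0
    for z, t in zip(logits, targets):
        # Numerically stable BCE with logits: max(z, 0) - z*t + log(1 + exp(-|z|))
        loss += max(z, 0.0) - z * t + math.log1p(math.exp(-abs(z)))
    return loss


def predicted_grade(logits):
    """Discrete prediction: number of thresholds whose probability exceeds 0.5."""
    return sum(1 for z in logits if z > 0.0)


def continuous_score(logits):
    """Soft position along the continuum, between 0 and K-1."""
    return sum(sigmoid(z) for z in logits)
```

For example, with 4 hypothetical grades, `ordinal_targets(2, 4)` encodes grade 2 as two thresholds passed and one not passed. Because neighbouring grades share most of their targets, predicting an adjacent grade costs less than predicting a distant one, which is what pushes the learned representation toward an ordered continuum rather than unrelated clusters.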

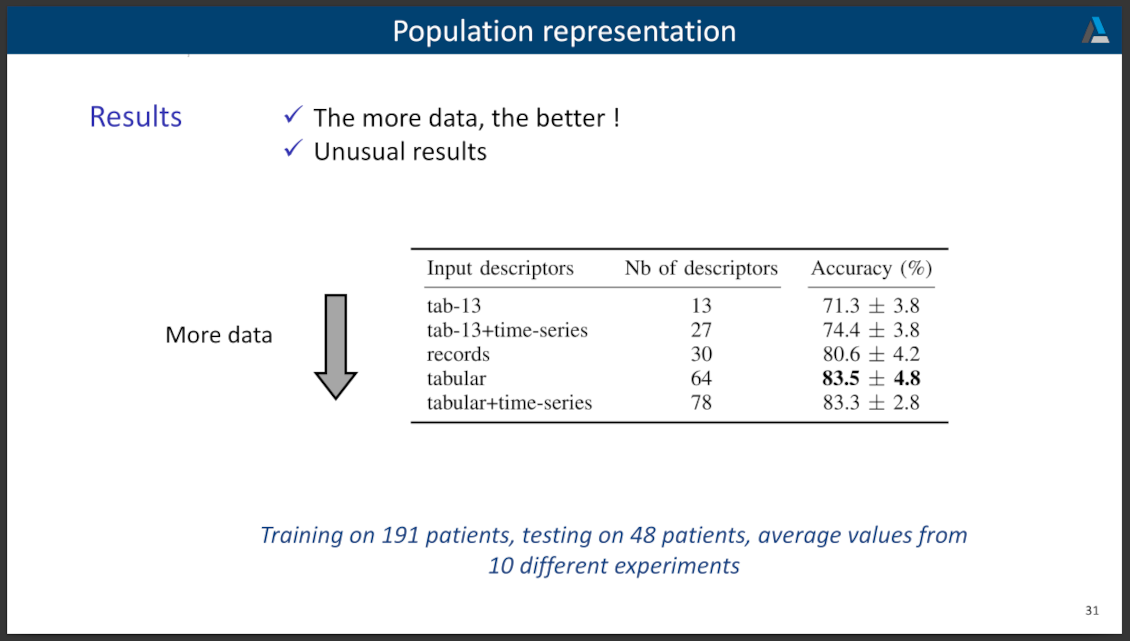

We observe the major trends along this continuum for a cohort of 239 hypertensive patients to describe, with unprecedented gradation, the effect of hypertension on a number of cardiac function descriptors. Our analysis shows that i) pretrained weights from a foundation model make it possible to reach good performance (83% accuracy) even with limited data (fewer than 200 training samples), ii) trends across the population are reproducible across training runs, and iii) for descriptors whose interactions with hypertension are well documented, the observed patterns are consistent with prior physiological knowledge.